Unfolding IRT models: Do they always fit when expected?

Abstract

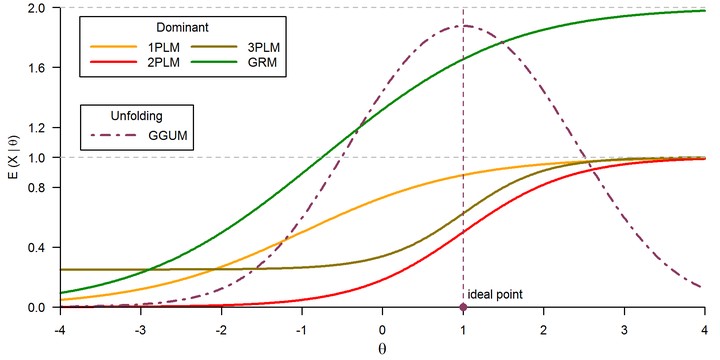

Item Response Theory (IRT) models are now popular statistical tools in educational, psychological, and clinical assessment. However, the quality of results based on IRT models crucially depends on achieving a minimum level of model fit. Hence, it is important to assess which model best fits the data at hand. There are two important classes of IRT models: cumulative models and unfolding models. Unfolding models in particular are commonly recommended for measuring attitudes and preferences, as is typically the case in clinical settings. However, little research has actually examined how well unfolding models perform compared with cumulative models in this type of context. Using empirical data, we examine how the most popular unfolding model in use, the generalized graded unfolding model (GGUM), fares against the more classical cumulative polytomous IRT models. We fit both cumulative and unfolding models to clinical questionnaire data. Results indicate that the most obvious model choice was not always the one that fit the data best. The most important implication is that practitioners need to be very careful when assessing model fit, regardless of what the apparent structure of the data may suggest.
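The contrast between the two model classes can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' code. It assumes Samejima's graded response model as a representative cumulative model, and all item parameters are hypothetical values chosen only for illustration. Under the cumulative model, the expected item score keeps rising with the latent trait θ. Under the GGUM, it is highest near the item location δ and falls off on both sides, which reflects the ideal-point reasoning behind recommending unfolding models for attitude items.

```python
import math

def grm_probs(theta, a, b):
    """Category probabilities (0..C) under the graded response model, a cumulative model.
    b: ordered thresholds b_1 < ... < b_C (illustrative values)."""
    # Cumulative probabilities P(Z >= k): 1 for k = 0, logistic for k = 1..C, 0 beyond C.
    cum = [1.0] + [1.0 / (1.0 + math.exp(-a * (theta - bk))) for bk in b] + [0.0]
    return [cum[k] - cum[k + 1] for k in range(len(b) + 1)]

def ggum_probs(theta, alpha, delta, tau):
    """Category probabilities (0..C) under the generalized graded unfolding model.
    tau: thresholds tau_0..tau_C with tau_0 = 0 (illustrative values)."""
    C = len(tau) - 1
    M = 2 * C + 1  # number of subjective response categories minus one
    d = theta - delta
    numer = []
    cum_tau = 0.0
    for z in range(C + 1):
        cum_tau += tau[z]
        # Each observed category pools two subjective responses: agree from below or above.
        numer.append(math.exp(alpha * (z * d - cum_tau))
                     + math.exp(alpha * ((M - z) * d - cum_tau)))
    total = sum(numer)
    return [n / total for n in numer]

def expected_score(probs):
    """Expected item score: sum of category index times its probability."""
    return sum(k * p for k, p in enumerate(probs))

if __name__ == "__main__":
    # Hypothetical parameters for one 4-category item (scores 0..3).
    GRM_A, GRM_B = 1.5, [-1.0, 0.0, 1.0]
    GGUM_ALPHA, GGUM_DELTA, GGUM_TAU = 1.0, 0.0, [0.0, -1.5, -1.0, -0.5]
    for theta in [-3, -2, -1, 0, 1, 2, 3]:
        e_grm = expected_score(grm_probs(theta, GRM_A, GRM_B))
        e_ggum = expected_score(ggum_probs(theta, GGUM_ALPHA, GGUM_DELTA, GGUM_TAU))
        print(f"theta={theta:+d}  GRM={e_grm:.2f}  GGUM={e_ggum:.2f}")
```

With these parameters, the GRM column increases across the grid, while the GGUM column peaks at θ = δ = 0 and is symmetric around it. When both response processes are plausible, which one fits better is an empirical question, and that is the question the abstract raises.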